Introduction

I’ve been a software developer for about 12 years now, mostly working on robotics and AI systems for industrial automation. But recently, I’ve been diving into the world of medical robotics, and let me tell you, it’s a whole different beast. On May 21, 2025, IEEE Spectrum dropped a fascinating article titled “Robots Are Starting to Make Decisions in the Operating Room,” highlighting how autonomous robotic systems are beginning to take on decision-making roles in surgery. I’ve been following this space closely, and as someone who’s written code for robotic systems, I wanted to share my honest take on this development, the challenges I’ve seen in similar projects, and what it might mean for the future of healthcare and for developers like me. Let’s dig in.

First Encounter: Seeing Autonomous Robots in Action

My first brush with autonomous surgical robots came about a year ago when I collaborated on a project with a team at a local university. They were working on a system inspired by the Smart Tissue Autonomous Robot (STAR), which IEEE mentioned had performed laparoscopic surgery on a live animal back in 2020. Our goal was to build a prototype that could assist with suturing soft tissue, something the IEEE article notes next-generation systems are now capable of with minimal human input. I was tasked with developing the control algorithms that would allow the robot to adapt to the squishy, unpredictable nature of soft tissue in real time.

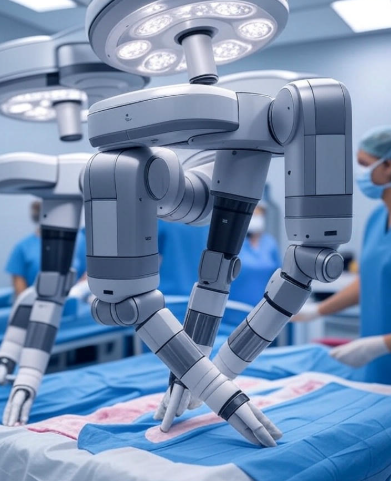

The first time I saw our prototype in action, I was floored. We tested it on a synthetic tissue model, and the robot’s arm (sleek, precise, and equipped with advanced imaging) moved with a confidence I didn’t expect. It adjusted its suturing path on the fly as the tissue shifted, using AI to predict and react to changes. I remember thinking, “This is the future.” But as a developer, I also knew how much work went into making that demo look seamless. Behind the scenes, there were countless hours of tweaking machine learning models, debugging sensor data, and ensuring the system wouldn’t make a catastrophic mistake. The IEEE article captures this complexity well, pointing out that surgical scenarios are full of surprises, unlike the controlled environments of a factory assembly line where I’d worked before.
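To give a feel for what “adjusting the path on the fly” involves, here’s a minimal sketch. It is not the code from our prototype, and it swaps the learned prediction for a much simpler, classic technique: inverse-distance-weighted (IDW) interpolation of tracked landmark displacements. It assumes the vision system already reports 2D positions of a few tissue landmarks before and after a shift; the function names, the `power` parameter, and the example coordinates are all hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def idw_displacement(p: Point, anchors: List[Point], shifts: List[Point],
                     power: float = 2.0) -> Point:
    """Interpolate the displacement at p from landmark shifts (IDW)."""
    if not anchors:
        return (0.0, 0.0)  # no tracked landmarks: leave the point where it is
    weights = []
    for (ax, ay), shift in zip(anchors, shifts):
        d = math.hypot(p[0] - ax, p[1] - ay)
        if d < 1e-9:
            return shift  # p coincides with a landmark: use its shift exactly
        weights.append(1.0 / d ** power)  # closer landmarks get more say
    total = sum(weights)
    dx = sum(w * s[0] for w, s in zip(weights, shifts)) / total
    dy = sum(w * s[1] for w, s in zip(weights, shifts)) / total
    return (dx, dy)


def update_suture_path(remaining: List[Point], before: List[Point],
                       after: List[Point], power: float = 2.0) -> List[Point]:
    """Move each not-yet-placed suture point along with the nearby tissue."""
    shifts = [(a[0] - b[0], a[1] - b[1]) for b, a in zip(before, after)]
    updated = []
    for p in remaining:
        dx, dy = idw_displacement(p, before, shifts, power)
        updated.append((p[0] + dx, p[1] + dy))
    return updated


# Hypothetical example: the left landmark moved 2 units right, the right one stayed.
before = [(0.0, 0.0), (10.0, 0.0)]
after = [(2.0, 0.0), (10.0, 0.0)]
# Suture points near the moved landmark shift more than those far from it.
print(update_suture_path([(2.0, 0.0), (5.0, 0.0), (8.0, 0.0)], before, after))
```

Because nearby landmarks dominate the weighting, a local bulge moves only the nearby stitches instead of dragging the whole path. A real system would work in 3D and would also need to cope with landmarks that get occluded or mis-tracked.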

The Developer’s Challenge: Building for the Unpredictable

Coding for surgical robots is one of the toughest challenges I’ve faced as a developer. In my previous projects, like programming robotic arms for car manufacturing, the environment was predictable, with the same parts and the same movements every time. But in surgery, as IEEE notes, you’re dealing with dynamic interactions: soft tissues, blood vessels, and organs, all shifting in real time. I had to integrate advanced AI algorithms to help the robot make decisions on the fly, which meant diving deep into computer vision and machine learning, areas I wasn’t an expert in when I started.

One of the biggest hurdles was training the AI to handle variability. We used a mix of synthetic data and real surgical footage to train our models, but it was never enough. The robot would sometimes misinterpret a shadow on the tissue as a blood vessel, or it would hesitate when the tissue moved too quickly. I spent weeks fine-tuning the model, adjusting parameters, and adding fallback mechanisms so the robot would pause and alert a human surgeon if it got confused. The IEEE article mentions the need for sophisticated mechanical design and innovative imaging techniques, and I can vouch for that: our team relied heavily on high-res cameras and 3D mapping to give the robot a clear view of the surgical field.
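The “pause and alert a human if it got confused” fallback can be sketched roughly like this. The idea is to look not only at the top class probability but also at the margin between the top two classes, since an ambiguous case like shadow versus blood vessel can show up as two labels with similar scores. This is a minimal illustration, not our project’s code; the labels, the thresholds (0.85 and 0.2), and the function names are hypothetical placeholders.

```python
import math
from typing import Dict, List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify_or_defer(logits: Sequence[float], labels: Sequence[str],
                      min_confidence: float = 0.85,
                      min_margin: float = 0.2) -> Dict[str, object]:
    """Act on the top label only if the model is confident AND unambiguous."""
    if len(labels) < 2 or len(labels) != len(logits):
        raise ValueError("need one logit per label and at least two labels")
    probs = softmax(logits)
    ranked = sorted(zip(probs, labels), reverse=True)
    (p1, top), (p2, runner_up) = ranked[0], ranked[1]
    if p1 < min_confidence or p1 - p2 < min_margin:
        # Too unsure, or two readings (e.g. vessel vs. shadow) are too close:
        # stop and ask the surgeon instead of guessing.
        return {"action": "pause_and_alert", "candidates": (top, runner_up),
                "p_top": p1, "margin": p1 - p2}
    return {"action": "proceed", "label": top, "p_top": p1}


labels = ["tissue", "blood_vessel", "shadow"]
print(classify_or_defer([4.0, 0.5, 0.2], labels)["action"])  # clear-cut case
print(classify_or_defer([0.2, 1.1, 1.0], labels)["action"])  # vessel vs. shadow
```

Raw softmax scores are often poorly calibrated, so in practice you would calibrate the model (temperature scaling is a common choice) and tune both thresholds on held-out data before trusting either number.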

Another challenge was ensuring safety. In manufacturing, a robot messing up might mean a defective car part. In surgery, it could mean a patient’s life. We implemented multiple layers of redundancy: if the AI’s confidence score dropped below a threshold, the system would halt; if the sensors detected an anomaly, like unexpected resistance, it would switch to manual override. But even with all these safeguards, I couldn’t shake the feeling of responsibility. Every line of code I wrote had to be perfect, and that pressure was intense.
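The layered safeguards described above map naturally onto a small state machine. This is a minimal sketch, assuming a scalar confidence score from the model and a force sensor whose reading can be compared with the resistance the planner expected; `SafetySupervisor`, `Reading`, the 0.9 confidence threshold, and the 0.5 N force tolerance are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    AUTONOMOUS = "autonomous"
    HALTED = "halted"                    # motion stopped, waiting for a human
    MANUAL_OVERRIDE = "manual_override"  # surgeon has direct control


@dataclass
class Reading:
    confidence: float        # model's confidence in its current action, 0..1
    expected_force_n: float  # resistance the planner predicted, in newtons
    measured_force_n: float  # resistance the force sensor actually reports


@dataclass
class SafetySupervisor:
    min_confidence: float = 0.9     # hypothetical threshold
    max_force_error_n: float = 0.5  # hypothetical tolerance, newtons
    mode: Mode = Mode.AUTONOMOUS

    def step(self, r: Reading) -> Mode:
        # Once a safeguard trips, stay tripped until a surgeon resets it.
        if self.mode is not Mode.AUTONOMOUS:
            return self.mode
        # Layer 1: unexpected resistance -> hand control to the surgeon.
        if abs(r.measured_force_n - r.expected_force_n) > self.max_force_error_n:
            self.mode = Mode.MANUAL_OVERRIDE
        # Layer 2: low model confidence -> halt and wait.
        elif r.confidence < self.min_confidence:
            self.mode = Mode.HALTED
        return self.mode

    def reset_by_surgeon(self) -> None:
        """Only an explicit human action returns the system to autonomy."""
        self.mode = Mode.AUTONOMOUS


sup = SafetySupervisor()
print(sup.step(Reading(confidence=0.97, expected_force_n=1.0, measured_force_n=1.1)))
print(sup.step(Reading(confidence=0.60, expected_force_n=1.0, measured_force_n=1.0)))
```

Two choices here are judgment calls rather than requirements: the force check runs before the confidence check, so a physical anomaly always hands control to the surgeon, and the mode latches, so the robot never resumes autonomy on its own once a safeguard has tripped.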

The Impact: What This Means for Healthcare and Developers

The IEEE article paints an exciting picture of the future: autonomous robotic assistants becoming a standard in operating rooms, enhancing precision, reducing risks, and improving patient outcomes. I can see why they’re optimistic. During our tests, our prototype reduced suturing time by about 20% compared to a human surgeon, and the stitches were more consistent. If this tech scales, as IEEE suggests, it could address surgeon shortages (a real issue in places like rural hospitals) and make high-quality care more accessible.

For patients, the benefits are clear: fewer complications, shorter recovery times, and less human error. The article mentions that robots can now suture soft tissue with minimal input, which aligns with what I’ve seen. But I also think about the flip side. On X, some users have raised concerns about whether these robots might prioritize patients based on their ability to pay, or what happens if the system fails mid-surgery. These are valid questions. As a developer, I can say we’re building these systems to be as reliable as possible, but no tech is foolproof. There needs to be a human surgeon in the loop, at least for now, to handle the unexpected.

For developers, this trend is both an opportunity and a challenge. On one hand, my medical robotics work has been the most rewarding of my career. I’ve learned new skills, like training neural networks for real-time decision-making, and I’ve collaborated with brilliant researchers, like the folks at Johns Hopkins University mentioned in the IEEE piece. On the other hand, the stakes are higher than anything I’ve worked on before. The learning curve is steep, and the ethical questions, like ensuring equitable access to this tech, keep me up at night.

The Broader Picture: Changing the Role of Surgeons and Developers

This rise of autonomous surgical robots is also changing the role of surgeons and developers like me. The IEEE article quotes researchers like Justin Opfermann and Samuel Schmidgall from Johns Hopkins, who are working on these systems. They envision a future where surgeons are more like supervisors, guiding robots rather than performing every task themselves. I’ve seen this shift firsthand. In our tests, the surgeon we worked with spent less time on repetitive tasks like suturing and more time on high-level decision-making, like planning the procedure. It’s a bit like how I’ve had to shift from writing low-level code to designing AI-driven systems that can learn and adapt.

But this also raises questions about training. Just as I’ve had to upskill to work on these robots, surgeons will need to learn how to work with them. And what about new developers entering the field? Will they need to be AI experts to contribute, or will there still be a place for traditional coding skills? I think the future will demand a mix of both: deep technical knowledge to build these systems and a strong ethical foundation to ensure they’re used responsibly.

Tips for Developers Jumping into Medical Robotics

If you’re a developer thinking about jumping into medical robotics, here’s my advice based on my experience. First, get comfortable with AI and machine learning. You don’t need to be an expert, but understanding how to train and deploy models for real-time decision-making is crucial. Second, focus on safety. Build in redundancies, test rigorously, and always assume something will go wrong. Third, collaborate closely with domain experts (surgeons, in this case). They’ll help you understand the nuances of the operating room, which you can’t learn from a textbook. Finally, stay humble. This tech is amazing, but it’s not perfect, and it’s our job to make sure it helps more than it harms.

Looking Ahead: The Future of Autonomous Surgical Robots

The IEEE article ends with a vision of autonomous robots becoming a standard in medicine, and I think we’re on that path. By 2030, I can imagine walking into an operating room and seeing a sleek robotic arm, like the one IEEE describes, working alongside a surgeon and handling everything from suturing to tissue retraction. But I also hope we address the ethical concerns along the way, ensuring this tech benefits everyone, not just those who can afford it.

For now, I’m proud to be part of this journey. Working on autonomous surgical robots has pushed me to grow as a developer and think more deeply about the impact of my code. If you’re a developer or a tech enthusiast, I’d love to hear your thoughts: how do you see this tech shaping the future of healthcare?