Artificial Neural Networks (ANNs) underpin much of modern machine learning and artificial intelligence. Loosely inspired by the structure of the human brain, ANNs have transformed fields like computer vision, natural language processing, and predictive analytics. Among the most powerful variants are multi-layer neural networks, which can learn complex patterns and representations from data. In this blog post, we’ll dive into how multi-layer neural networks work, their architecture, and why they are so effective.

What is a Multi-Layer Neural Network?

A multi-layer neural network is a type of artificial neural network with one or more layers of neurons (also called nodes or units) between the input and output layers. These additional layers are called hidden layers, and they enable the network to learn hierarchical representations of data. The more hidden layers a network has, the deeper it is, which is why networks with several hidden layers are often referred to as deep neural networks (DNNs).

The simplest multi-layer network has:

- Input Layer: Receives the raw input data.

- Hidden Layer(s): Transforms the input data into higher-level features.

- Output Layer: Produces the final prediction or classification.

Key Components of a Multi-Layer Neural Network

Neurons (Nodes):

- Each neuron receives inputs, computes a weighted sum of them plus a bias, applies an activation function, and produces an output.

- Neurons in one layer are connected to neurons in the next layer via weights.

Weights and Biases:

- Weights determine the strength of the connection between neurons.

- A bias is a learnable offset added to the weighted sum. It shifts the point at which the neuron activates, which lets the network fit data that a purely weighted sum could not.
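
To make the roles of weights and biases concrete, here is a minimal sketch of a single neuron in NumPy. The function name `neuron_output`, the sigmoid activation, and the input values are illustrative assumptions, not something prescribed by any particular library.

```python
import numpy as np

def neuron_output(x, w, b):
    """Weighted sum of the inputs plus a bias, passed through a sigmoid."""
    z = np.dot(w, x) + b               # pre-activation: w . x + b
    return 1.0 / (1.0 + np.exp(-z))    # sigmoid activation

x = np.array([1.0, 2.0])      # illustrative inputs
w = np.array([0.5, -0.25])    # illustrative weights

# w . x = 0.5*1.0 + (-0.25)*2.0 = 0.0, so here the bias alone decides the output.
print(neuron_output(x, w, b=0.0))   # 0.5
print(neuron_output(x, w, b=2.0))   # about 0.88
```

With the same inputs and weights, changing only the bias moves the output from 0.5 to roughly 0.88, which is the "shift" described above.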

Activation Functions:

- Activation functions introduce non-linearity into the network, allowing it to learn complex patterns.

- Common activation functions include ReLU (Rectified Linear Unit), Sigmoid, and Tanh.
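
The three activation functions named above are short enough to write out directly. This is a sketch in NumPy; the input vector `z` is just an example.

```python
import numpy as np

def relu(z):
    """ReLU: keeps positive values, replaces negative values with 0."""
    return np.maximum(0.0, z)

def sigmoid(z):
    """Sigmoid: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def tanh(z):
    """Tanh: squashes any real number into the range (-1, 1)."""
    return np.tanh(z)

z = np.array([-2.0, 0.0, 2.0])
print(relu(z))      # [0. 0. 2.]
print(sigmoid(z))   # values between 0 and 1, with sigmoid(0) = 0.5
print(tanh(z))      # values between -1 and 1, with tanh(0) = 0
```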

Layers:

- Input Layer: Holds the raw input features and passes them to the first hidden layer without transforming them.

- Hidden Layers: Perform feature extraction and transformation.

- Output Layer: Produces the final result (e.g., a classification or regression value).
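
One way to picture the layers in code is as a list of weight matrices and bias vectors, one pair for each connection between consecutive layers. The layer sizes below (4 inputs, 3 hidden units, 2 outputs) are hypothetical and chosen only to keep the shapes easy to read.

```python
import numpy as np

rng = np.random.default_rng(0)

layer_sizes = [4, 3, 2]   # hypothetical: 4 inputs, 3 hidden units, 2 outputs

# One (weights, biases) pair per connection between consecutive layers.
params = [
    (rng.normal(size=(n_in, n_out)), np.zeros(n_out))
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])
]

for i, (W, b) in enumerate(params, start=1):
    print(f"layer {i}: W {W.shape}, b {b.shape}")
# layer 1: W (4, 3), b (3,)
# layer 2: W (3, 2), b (2,)
```

Note that the input layer has no parameters of its own: the first weight matrix belongs to the hidden layer that reads from it.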

How Does a Multi-Layer Neural Network Work?

Training a multi-layer neural network alternates between two main phases: forward propagation, which produces a prediction, and backpropagation, which works out how to improve it.

1. Forward Propagation

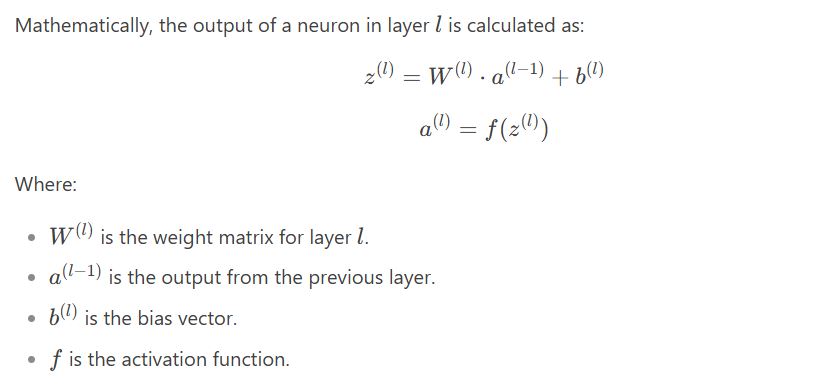

During forward propagation, input data is passed through the network layer by layer to produce an output. Here’s how it works:

- The input data is fed into the input layer.

- Each neuron in the hidden layers computes a weighted sum of its inputs, adds a bias, and applies an activation function.

- This process continues until the output layer produces the final prediction.
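
The steps above can be sketched in a few lines of NumPy. The `forward` helper, the choice of ReLU for hidden layers with no activation on the output, and the hand-picked weights are all assumptions made for illustration.

```python
import numpy as np

def relu(z):
    return np.maximum(0.0, z)

def forward(x, params):
    """Pass x through each (W, b) pair: ReLU on hidden layers, no activation on the last."""
    a = x
    for i, (W, b) in enumerate(params):
        z = a @ W + b                                  # weighted sum plus bias
        a = z if i == len(params) - 1 else relu(z)     # activation on hidden layers only
    return a

# Hand-picked weights so the result can be checked by hand.
W1 = np.array([[1.0, -1.0],
               [1.0, -1.0]])
b1 = np.array([0.0, 0.0])
W2 = np.array([[1.0],
               [1.0]])
b2 = np.array([0.5])

x = np.array([[1.0, 2.0]])    # one sample with two features
y = forward(x, [(W1, b1), (W2, b2)])
print(y)   # [[3.5]]
```

Following it by hand: the hidden layer computes [3, -3], ReLU turns that into [3, 0], and the output layer returns 3 + 0 + 0.5 = 3.5.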

2. Backpropagation

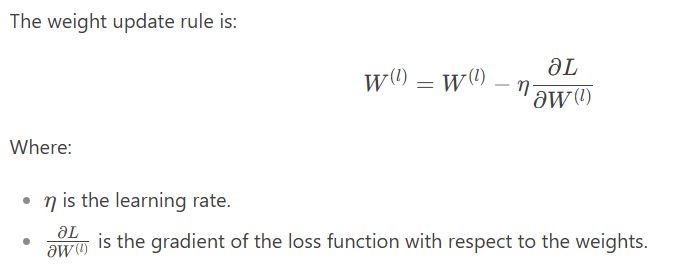

Backpropagation computes how much each weight and bias contributed to the error between the predicted output and the actual output, so that an optimizer can adjust them to reduce that error. Here’s how it works:

- The error is calculated using a loss function (e.g., Mean Squared Error for regression or Cross-Entropy Loss for classification).

- The gradient of the loss function with respect to each weight and bias is computed using the chain rule of calculus.

- The weights and biases are updated using an optimization algorithm like Gradient Descent or its variants (e.g., Adam, RMSprop).
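
Here is a minimal sketch of those three steps on the XOR problem, written in plain NumPy with the gradients derived by hand. The network size (4 tanh hidden units, a linear output), the learning rate, and the number of steps are illustrative assumptions. In practice a framework would compute the gradients automatically.

```python
import numpy as np

rng = np.random.default_rng(0)

# XOR: a small problem that a network without a hidden layer cannot fit.
X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]])
Y = np.array([[0.0], [1.0], [1.0], [0.0]])

# Hypothetical sizes: 2 inputs, 4 tanh hidden units, 1 linear output.
W1 = rng.normal(size=(2, 4))
b1 = np.zeros(4)
W2 = rng.normal(size=(4, 1))
b2 = np.zeros(1)

lr = 0.1       # learning rate (assumed)
losses = []
for step in range(2000):
    # Forward pass.
    H = np.tanh(X @ W1 + b1)
    Y_hat = H @ W2 + b2
    loss = np.mean((Y_hat - Y) ** 2)     # Mean Squared Error
    losses.append(loss)

    # Backward pass: chain rule applied from the loss back to each parameter.
    dY_hat = 2.0 * (Y_hat - Y) / Y.size  # d loss / d Y_hat
    dW2 = H.T @ dY_hat
    db2 = dY_hat.sum(axis=0)
    dH = dY_hat @ W2.T
    dZ1 = dH * (1.0 - H ** 2)            # derivative of tanh is 1 - tanh^2
    dW1 = X.T @ dZ1
    db1 = dZ1.sum(axis=0)

    # Plain gradient descent update.
    W1 -= lr * dW1
    b1 -= lr * db1
    W2 -= lr * dW2
    b2 -= lr * db2

print(f"loss: {losses[0]:.4f} -> {losses[-1]:.4f}")
```

The printed loss should be lower at the end than at the start. Swapping the last four update lines for Adam or RMSprop changes how the step is taken, not how the gradients are computed.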

Why Are Multiple Layers Important?

Multi-layer neural networks excel at learning hierarchical features from data. Here’s why:

Feature Hierarchy:

- Early layers learn simple features (e.g., edges in an image).

- Deeper layers learn more complex features (e.g., shapes, objects).

Non-Linearity:

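- Multiple layers with non-linear activation functions allow the network to model complex, non-linear relationships in the data. Without those activations, any stack of layers would collapse into a single linear transformation, no matter how many layers it had.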
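
A quick numerical check makes the role of non-linearity visible: two layers stacked without an activation in between give exactly the same result as one merged linear layer. The shapes and random values below are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.normal(size=(5, 3))
W1, b1 = rng.normal(size=(3, 4)), rng.normal(size=4)
W2, b2 = rng.normal(size=(4, 2)), rng.normal(size=2)

# Two stacked layers with no activation in between...
two_layers = (x @ W1 + b1) @ W2 + b2

# ...equal one linear layer with merged weights and bias.
W, b = W1 @ W2, b1 @ W2 + b2
one_layer = x @ W + b
print(np.allclose(two_layers, one_layer))   # True

# With ReLU between the layers, the merged layer is no longer an equivalent.
with_relu = np.maximum(0.0, x @ W1 + b1) @ W2 + b2
print(np.allclose(with_relu, one_layer))
```

The second check is expected to print False for these random values, since ReLU zeroes out the negative pre-activations before the second layer sees them.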

Representation Learning:

- Hidden layers automatically learn useful representations of the input data, reducing the need for manual feature engineering.

Challenges in Training Multi-Layer Neural Networks

While multi-layer neural networks are powerful, they come with challenges:

Vanishing/Exploding Gradients:

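- Gradients can become too small or too large as they are multiplied back through many layers, making training slow or unstable. Techniques like careful weight initialization (e.g., He or Xavier initialization) and normalization (e.g., batch normalization) help mitigate this.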
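
A rough back-of-the-envelope sketch shows why gradients vanish with sigmoid activations, and what one common initialization scheme looks like. It deliberately ignores the weights and only tracks the activation derivatives. The `he_init` helper and the layer sizes are hypothetical.

```python
import numpy as np

def sigmoid_grad(z):
    """Derivative of the sigmoid function."""
    s = 1.0 / (1.0 + np.exp(-z))
    return s * (1.0 - s)

print(sigmoid_grad(0.0))   # 0.25, the largest value the derivative can take

# Best case for a chain of sigmoid layers: the activation derivatives
# contribute a factor of at most 0.25 per layer.
for depth in (2, 5, 10, 20):
    print(depth, 0.25 ** depth)

def he_init(n_in, n_out, rng):
    """He initialization: normal weights with standard deviation sqrt(2 / n_in)."""
    return rng.normal(scale=np.sqrt(2.0 / n_in), size=(n_in, n_out))

W = he_init(256, 128, np.random.default_rng(0))
print(W.std())   # close to sqrt(2 / 256), about 0.088
```

By 20 layers the best-case factor is below one in a trillion, which is part of why ReLU and scaled initializations are common in deep networks.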

Overfitting:

- Deep networks can memorize the training data instead of generalizing to new data. Regularization techniques like dropout and weight decay help reduce this.
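
Both techniques are simple at their core. The sketch below uses "inverted" dropout (rescaling during training so nothing changes at inference time) and weight decay written as an L2 penalty inside a plain gradient step. The function names and default values are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

def dropout(a, p_drop, training=True):
    """Inverted dropout: zero out units with probability p_drop, rescale the rest."""
    if not training or p_drop == 0.0:
        return a
    keep = 1.0 - p_drop
    mask = rng.random(a.shape) < keep    # True for the units that are kept
    return a * mask / keep

def sgd_step_with_weight_decay(W, grad, lr=0.1, weight_decay=0.01):
    """Gradient step plus an L2 penalty term that shrinks weights toward zero."""
    return W - lr * (grad + weight_decay * W)

a = np.ones((2, 5))
print(dropout(a, p_drop=0.5))                    # entries are either 0.0 or 2.0
print(dropout(a, p_drop=0.5, training=False))    # unchanged at inference time

W = np.array([1.0, -2.0])
# Even with a zero gradient, each weight shrinks by 0.1% per step.
print(sgd_step_with_weight_decay(W, grad=np.zeros(2)))
```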

Computational Cost:

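- Training deep networks requires significant computational resources and time, since both the number of parameters and the work per training step grow with the number and size of the layers.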
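
To get a feel for how quickly the cost grows, we can count the parameters of a fully connected network. The layer sizes here are illustrative (784 inputs would correspond to a 28x28 image) and the helper name is made up for this post.

```python
def count_parameters(layer_sizes):
    """Weights plus biases of a fully connected network with the given layer sizes."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]))

print(count_parameters([784, 128, 10]))        # 101770
print(count_parameters([784, 512, 512, 10]))   # 669706
```

Adding one hidden layer and widening the layers multiplies the parameter count by more than six, and every parameter needs a gradient on every training step.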

Applications of Multi-Layer Neural Networks

Multi-layer neural networks are used in a wide range of applications, including:

- Image Recognition: Convolutional Neural Networks (CNNs) for object detection and classification.

- Natural Language Processing: Recurrent Neural Networks (RNNs) and Transformers for text generation and translation.

- Speech Recognition: Deep networks for converting speech to text.

- Predictive Analytics: Regression and classification tasks in finance, healthcare, and more.

Conclusion

Multi-layer neural networks are a cornerstone of modern machine learning, enabling machines to learn complex patterns and make intelligent decisions. By leveraging multiple layers of neurons, these networks can automatically extract hierarchical features from data, making them incredibly versatile and powerful. However, training deep networks requires careful tuning and optimization to overcome challenges like vanishing gradients and overfitting.

As research in deep learning continues to advance, multi-layer neural networks are likely to become more efficient and capable, opening up new possibilities in AI and beyond. Whether you’re a beginner or an expert, understanding how these networks work is a solid foundation for following the rest of machine learning.
