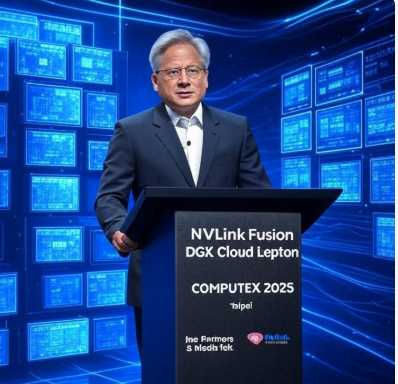

On May 19, 2025, Nvidia CEO Jensen Huang took the stage at Computex 2025, Asia’s largest electronics conference, in Taipei, Taiwan, to unveil a series of announcements aimed at solidifying Nvidia’s position as the leader in AI computing. As reported by CNBC, these updates, including the NVLink Fusion program and NVIDIA DGX Cloud Lepton, signal a strategic shift to address rising competition and evolving market demands. With major tech players like Microsoft, Google, and Amazon developing their own custom AI chips, and geopolitical tensions reshaping global supply chains, Nvidia’s latest moves are both a bold assertion of dominance and a response to an increasingly complex landscape. This blog post examines Nvidia’s announcements, their implications for the AI ecosystem, and the challenges the company faces moving forward.

NVLink Fusion: A Strategic Opening of Nvidia’s Ecosystem

The centerpiece of Nvidia’s Computex 2025 announcements was the NVLink Fusion program, a significant departure from the company’s traditionally closed ecosystem. NVLink, Nvidia’s high-speed interconnect technology, has largely been confined to Nvidia’s own GPUs and CPUs, providing fast data exchange within its own hardware. NVLink Fusion now allows customers and partners to pair non-Nvidia CPUs and GPUs with Nvidia’s products over NVLink, so they can build semi-custom AI infrastructure. Huang emphasized this shift during his keynote, stating, “NVLink Fusion is so that you can build semi-custom AI infrastructure, not just semi-custom chips.”

This move responds directly to the growing trend of custom silicon development by Nvidia’s largest customers, which are increasingly also its competitors. Companies like Microsoft, Google, and Amazon have been investing heavily in their own AI chips, such as Microsoft’s Azure Maia, Google’s TPUs, and Amazon’s Trainium, to reduce their dependence on Nvidia’s GPUs. By opening NVLink to third-party chips, Nvidia positions itself as a central player in hybrid AI systems, even in data centers that don’t run exclusively on its hardware. MediaTek, Marvell, Alchip, Astera Labs, Synopsys, and Cadence have already signed on as partners, and customers such as Fujitsu and Qualcomm will be able to connect their own CPUs to Nvidia GPUs in AI data centers.

Analysts see this as a double-edged sword. On one hand, NVLink Fusion broadens Nvidia’s industry footprint, fostering collaboration with custom CPU and ASIC developers and potentially capturing a larger share of the data center market. On the other hand, it could reduce demand for Nvidia’s own CPUs, as customers may opt for cheaper or more specialized alternatives. Even so, the added flexibility makes Nvidia’s GPU-based solutions more competitive against emerging architectures and helps keep the company at the center of AI computing.

DGX Cloud Lepton: Democratizing Access to AI Compute Power

Another major announcement was the launch of the NVIDIA DGX Cloud Lepton, an AI platform designed to connect developers with a global network of GPU resources. Described by Nvidia as a “compute marketplace,” DGX Cloud Lepton aims to address the critical challenge of securing reliable, high-performance GPU capacity for AI development. With tens of thousands of GPUs available through cloud providers, the platform unifies access to Nvidia’s compute ecosystem, enabling developers to train and deploy AI models more efficiently.

This initiative comes at a time when demand for AI compute resources is skyrocketing. Tech giants like Meta, Amazon, Alphabet, and Microsoft are projected to spend over $300 billion on AI technologies and data center buildouts in 2025 alone, driven by the need to scale AI workloads. However, supply shortages have constrained growth, with cloud providers like Microsoft reporting weaker-than-expected Azure performance due to limited GPU availability. DGX Cloud Lepton seeks to alleviate these bottlenecks by creating a centralized hub for GPU access, potentially accelerating innovation across industries.

For smaller developers and startups, the platform could significantly widen access to the kind of compute power previously reserved for hyperscalers. However, questions remain about pricing and availability, and about whether Nvidia can meet global GPU demand without further straining its supply chain.

Expanding in Taiwan: A New AI Supercomputer with Foxconn

Nvidia also announced plans to deepen its presence in Taiwan, a critical hub for global semiconductor manufacturing. The company revealed it will establish a new office in the region and work with Foxconn (formally Hon Hai Technology Group, the world’s largest electronics manufacturer) to build an AI supercomputer. The Taiwan expansion comes as Nvidia is also moving more of its manufacturing to the United States, a strategy influenced by President Donald Trump’s tariffs aimed at encouraging domestic production.

Together, these moves point to an effort to make Nvidia’s supply chain more resilient amid U.S.-China trade tensions. In April 2025, Nvidia underscored the importance of U.S. manufacturing, announcing plans to produce up to $500 billion of AI infrastructure in the U.S. over the next four years through manufacturing partners, including AI supercomputer production in Texas with Foxconn. The Taiwan initiative complements that effort by drawing on the region’s electronics expertise to support Nvidia’s global ambitions.

Competitive Pressures and Geopolitical Challenges

While Nvidia’s announcements at Computex 2025 reinforce its leadership, the company faces significant challenges. Competitors like AMD, Intel, and custom silicon developers are intensifying their efforts to capture market share in AI computing. Moreover, cloud providers, some of Nvidia’s largest customers, are building more of their own chips to power AI workloads, reducing their reliance on Nvidia. This dual role as both partner and competitor creates a delicate balancing act for Nvidia.

Geopolitically, Nvidia is navigating a minefield. The U.S. government’s export controls on advanced AI chips, initially implemented under the Biden administration and since modified under Trump, have restricted Nvidia’s ability to sell its most cutting-edge products to countries like China. In April 2025, Huang warned that China is “not behind” in AI development, citing Huawei as a formidable rival. Meanwhile, Nvidia has secured deals elsewhere, including in Saudi Arabia, where it will provide 18,000 Blackwell chips for a 500-megawatt data center, a sign of continued global demand for its technology.

However, scrutiny over Nvidia’s compliance with export laws is intensifying. The U.S. House Select Committee on the Chinese Communist Party has launched an investigation into whether Nvidia’s chips were used by China’s DeepSeek to develop AI models, potentially in violation of U.S. regulations. Separately, Nvidia has denied reports that it is sending GPU designs to China. Both controversies underscore the geopolitical risks the company faces as a leader in AI.

Ethical and Market Implications

Nvidia’s dominance in AI computing raises broader questions about the concentration of power in the tech industry. As AI becomes increasingly integral to everything from healthcare to defense, the company’s role in shaping the future of technology carries significant ethical implications. Critics argue that Nvidia’s control over AI infrastructure could stifle competition, while its sales into markets where AI may have military applications, such as Saudi Arabia, raise concerns about how the technology could be used in conflict zones.

From a market perspective, Nvidia’s stock has been volatile in 2025, at one point falling more than 20% for the year after nearly tripling in value in 2024. While NVLink Fusion and DGX Cloud Lepton could drive growth, investors remain cautious about the transition to Nvidia’s Blackwell chips, which could cause a temporary lull in demand for older models like the H100. Nevertheless, some analysts are optimistic, predicting that Nvidia’s revenue could triple over the next few years as the AI boom continues.

Conclusion: Nvidia’s Vision for the Future of AI

Nvidia’s Computex 2025 announcements reflect a company at the peak of its influence, yet acutely aware of the challenges ahead. By opening up NVLink, launching DGX Cloud Lepton, and expanding its global footprint, Nvidia is positioning itself as the backbone of the AI revolution. However, competition, geopolitical tensions, and ethical concerns will test its ability to maintain its lead. As Jensen Huang stated, AI is an “infinite race,” and Nvidia’s latest moves are a testament to its determination to stay ahead. The question now is whether the company can balance innovation with responsibility in an increasingly complex world.